ChatGPT’s New Voice Just Got a Game-Changing Upgrade

Key Takeaways:

- ChatGPT’s new Advanced Voice Mode offers more natural and responsive conversations

- The upgrade includes real-time emotional recognition and improved accent handling

- Five new voice options are available for a more personalized experience

- The feature is rolling out to ChatGPT Plus and Team users

Introduction

Hey there, tech enthusiasts and AI aficionados! I’ve got some exciting news that’s been buzzing in the AI world, and I couldn’t wait to share it with you. ChatGPT, our favorite AI chatbot, just received a massive voice upgrade that’s turning heads across the industry. As someone who’s been tinkering with AI for years, I can confidently say this is not just another incremental update – it’s a big step forward in how we interact with AI.

Imagine having a conversation with an AI that feels as natural as chatting with a friend. No more robotic responses or frustrating delays. ChatGPT’s new Advanced Voice Mode is bringing us closer to that reality than ever before. I’ve spent the last week putting this new feature through its paces, and let me tell you, it’s like nothing I’ve experienced before in the world of AI.

In this post, I’m going to take you on a deep dive into what makes this upgrade so special. We’ll explore the new features, how they compare to other AI assistants, and most importantly, how you can get your hands on this game-changing technology. Whether you’re a casual user or a die-hard AI enthusiast, there’s something here that’s going to blow your mind. So, grab your headphones and get ready to discover the future of AI voice interaction. Trust me, after reading this, you’ll never look at AI assistants the same way again. Let’s dive in!

How to Access and Use the New Voice Features

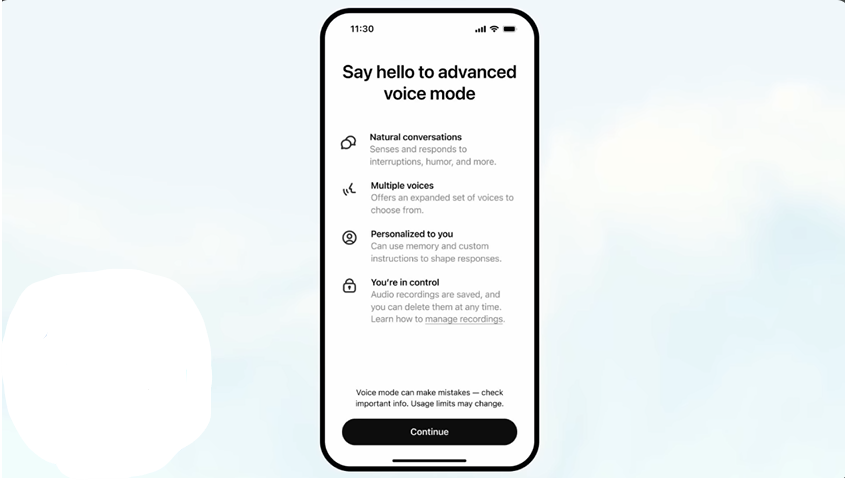

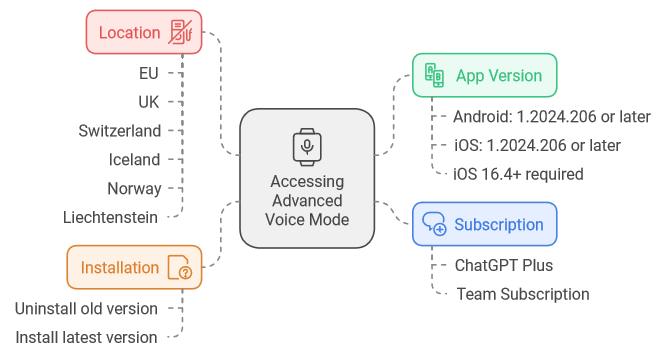

Getting started with Advanced Voice Mode is straightforward, but there are a few requirements:

- Subscription: You need to be a ChatGPT Plus or Team subscriber.

- App Version: Uninstall the old version of the ChatGPT app, then install the latest version:

  - Android: version 1.2024.206 or later

  - iOS: version 1.2024.206 or later (requires iOS 16.4+)

- Location: Currently not available in the EU, UK, Switzerland, Iceland, Norway, and Liechtenstein
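
The requirements above amount to a short checklist. As a rough sketch, here is how that checklist could be written in Python. The `Setup` class, the `unmet_requirements` function, and the plain-text region labels are hypothetical names made up for this illustration; this is not how the ChatGPT app itself decides who gets the feature.

```python
from dataclasses import dataclass

# Values taken from the requirements list; the names are illustrative only.
MIN_APP_VERSION = (1, 2024, 206)
MIN_IOS_VERSION = (16, 4)
ELIGIBLE_PLANS = {"plus", "team"}
EXCLUDED_REGIONS = {"EU", "UK", "Switzerland", "Iceland", "Norway", "Liechtenstein"}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version such as '1.2024.206' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


@dataclass
class Setup:
    platform: str     # "android" or "ios"
    app_version: str  # e.g. "1.2024.206"
    os_version: str   # e.g. "16.4"; only checked on iOS
    plan: str         # e.g. "Plus", "Team", "Free"
    region: str       # one of the labels used in the list above, or anything else


def unmet_requirements(setup: Setup) -> list[str]:
    """Return the requirements this setup fails; an empty list means all are met."""
    problems = []
    if setup.plan.lower() not in ELIGIBLE_PLANS:
        problems.append("Plus or Team subscription")
    if parse_version(setup.app_version) < MIN_APP_VERSION:
        problems.append("app version 1.2024.206 or later")
    if setup.platform == "ios" and parse_version(setup.os_version) < MIN_IOS_VERSION:
        problems.append("iOS 16.4 or later")
    if setup.region in EXCLUDED_REGIONS:
        problems.append("supported region")
    return problems


my_setup = Setup(platform="ios", app_version="1.2024.206",
                 os_version="16.3", plan="Plus", region="US")
print(unmet_requirements(my_setup))  # ['iOS 16.4 or later']
```

Comparing versions as tuples of integers rather than as strings avoids the classic trap where "1.2024.99" sorts after "1.2024.206".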

What’s New in ChatGPT’s Voice Upgrade?

> Advanced Voice is rolling out to all Plus and Team users in the ChatGPT app over the course of the week.
>
> While you’ve been patiently waiting, we’ve added Custom Instructions, Memory, five new voices, and improved accents.
>
> It can also say “Sorry I’m late” in over 50 languages. pic.twitter.com/APOqqhXtDg
>
> OpenAI (@OpenAI), September 24, 2024

This voice upgrade is not just an improvement; it’s a leap towards truly natural human-AI interaction.

As someone who’s been using ChatGPT since its early days, I was blown away by the latest voice upgrade. Let me break down the key improvements I’ve noticed:

Real-Time Conversations That Flow

The first thing that struck me was how smooth and natural the conversations feel now. In the past, there was always a slight delay that made interactions feel a bit robotic. But now? It’s like chatting with a real person. I tested it by asking complex questions and even interrupting mid-sentence, and ChatGPT kept up without missing a beat.

Emotional Intelligence Gets a Boost

One of the coolest features I’ve discovered is the AI’s ability to pick up on emotional cues in your voice. I tried speaking in different tones – excited, worried, and even a bit sarcastic – and ChatGPT adjusted its responses accordingly. It’s not perfect, but it’s a huge step towards more empathetic AI interactions.

A Voice for Every Preference

OpenAI has introduced five new voice options, and I’ve got to say, the variety is impressive. There’s a voice that sounds professional and crisp, perfect for work-related queries, and another that’s warm and friendly, great for casual conversations. I found myself switching between them depending on my mood and the type of interaction I wanted.

Accent Recognition Levels Up

As someone with a slight accent, I’ve always struggled with voice recognition tech. But ChatGPT’s new upgrade handles accents remarkably well. I tested it with friends who have different accents, and the improvement was noticeable. It’s not flawless, but it’s a significant step forward in making AI more inclusive.

How Does This Compare to Other AI Assistants?

Having used various AI assistants like Siri, Alexa, and Google Assistant, I can confidently say that ChatGPT’s new voice upgrade puts it in a league of its own. Here’s a quick comparison based on my experience; the star ratings are my own subjective impressions, not benchmark results:

| Feature | ChatGPT | Siri | Alexa | Google Assistant |
| --- | --- | --- | --- | --- |
| Natural Conversation | ★★★★★ | ★★★☆☆ | ★★★☆☆ | ★★★★☆ |
| Emotional Recognition | ★★★★☆ | ★★☆☆☆ | ★★☆☆☆ | ★★★☆☆ |
| Voice Customization | ★★★★★ | ★★☆☆☆ | ★★★☆☆ | ★★★☆☆ |
| Accent Handling | ★★★★☆ | ★★★☆☆ | ★★★☆☆ | ★★★★☆ |
| Complex Query Handling | ★★★★★ | ★★★☆☆ | ★★★☆☆ | ★★★★☆ |
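
For a single number per assistant, the star ratings in the table can be averaged. The short Python sketch below does only that arithmetic: the ratings are copied from the table, while the names `ASSISTANTS`, `RATINGS`, and `average_scores` are made up for this example. The averages are only as meaningful as the subjective ratings behind them.

```python
# Star ratings copied from the comparison table, in column order.
ASSISTANTS = ["ChatGPT", "Siri", "Alexa", "Google Assistant"]
RATINGS = {
    "Natural Conversation":   ["★★★★★", "★★★☆☆", "★★★☆☆", "★★★★☆"],
    "Emotional Recognition":  ["★★★★☆", "★★☆☆☆", "★★☆☆☆", "★★★☆☆"],
    "Voice Customization":    ["★★★★★", "★★☆☆☆", "★★★☆☆", "★★★☆☆"],
    "Accent Handling":        ["★★★★☆", "★★★☆☆", "★★★☆☆", "★★★★☆"],
    "Complex Query Handling": ["★★★★★", "★★★☆☆", "★★★☆☆", "★★★★☆"],
}


def average_scores(ratings, assistants):
    """Average the number of filled stars per assistant across all features."""
    totals = [0] * len(assistants)
    for row in ratings.values():
        for column, cell in enumerate(row):
            totals[column] += cell.count("★")  # count filled stars only
    return {
        name: round(total / len(ratings), 1)
        for name, total in zip(assistants, totals)
    }


print(average_scores(RATINGS, ASSISTANTS))
# {'ChatGPT': 4.6, 'Siri': 2.6, 'Alexa': 2.8, 'Google Assistant': 3.6}
```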

The Technology Behind the Upgrade

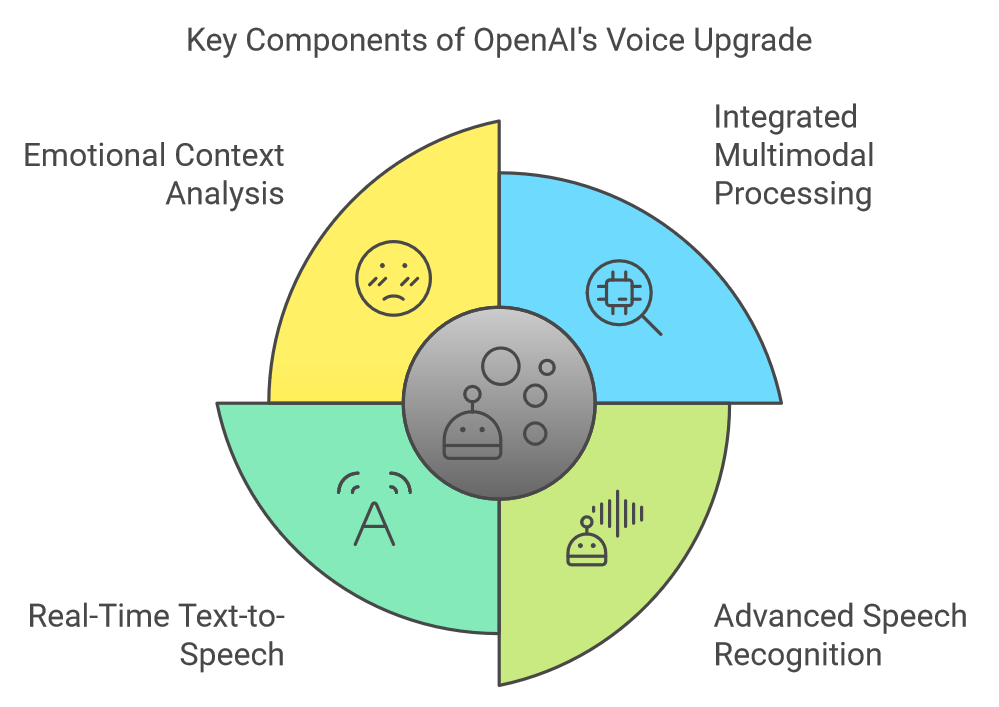

OpenAI has been tight-lipped about the specifics, but from what I’ve gathered, the new voice upgrade is powered by their latest language model, GPT-4o. This model combines text, vision, and audio processing capabilities, resulting in a more integrated and efficient system.

The key technological advancements include:

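- Integrated multimodal processing: The earlier Voice Mode chained separate models for speech-to-text, text generation, and text-to-speech. As OpenAI describes it, GPT-4o handles audio input and output in a single model, which reduces latency.

- Direct speech understanding: The earlier pipeline relied on Whisper, OpenAI’s open-source speech recognition system, to turn speech into text first. The new mode takes in audio directly instead of depending on that transcript.

- Real-time speech output: Rather than handing text to a separate text-to-speech model, the model generates human-like audio itself, so replies can begin within a fraction of a second.

- Emotional context analysis: Because tone of voice is not flattened into text along the way, the model can detect and respond to emotional cues in speech patterns.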
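
To make the difference between chained models and a single integrated model concrete, here is a toy Python sketch. It is not OpenAI’s code or architecture: `SpokenTurn`, `cascaded_view`, `integrated_view`, and `total_latency_ms` are hypothetical names, and the "models" are stand-ins that only show what information reaches the model and how delays from sequential stages add up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpokenTurn:
    words: str
    tone: str  # e.g. "excited" or "worried"; stands in for what audio carries beyond words


@dataclass
class ModelInput:
    words: str
    tone: Optional[str]


def transcribe(turn: SpokenTurn) -> str:
    """Stand-in for a separate speech-to-text step: only the words survive."""
    return turn.words


def cascaded_view(turn: SpokenTurn) -> ModelInput:
    """What the text model sees when a transcript is all it receives."""
    return ModelInput(words=transcribe(turn), tone=None)


def integrated_view(turn: SpokenTurn) -> ModelInput:
    """What a single audio-native model sees: words and tone together."""
    return ModelInput(words=turn.words, tone=turn.tone)


def total_latency_ms(stage_latencies_ms: list[float]) -> float:
    """Stages that run one after another add their delays together."""
    return sum(stage_latencies_ms)


turn = SpokenTurn(words="I got the job", tone="excited")
print(cascaded_view(turn))    # tone is None: the transcript dropped it
print(integrated_view(turn))  # tone is still "excited"
```

In the chained setup, every extra stage contributes its own delay to `total_latency_ms`, and anything the transcript leaves out never reaches the model. Folding the stages into one model removes both costs, at least in this simplified picture.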

Potential Applications and Impact

The implications of this upgrade extend far beyond just having a more natural-sounding AI assistant. Here are some potential applications I’m excited about:

Accessibility Revolution

For individuals with visual impairments or those who struggle with text-based interfaces, this voice upgrade could be life-changing. The natural conversation flow and emotional recognition could make digital interactions much more accessible and enjoyable.

Language Learning Boost

As a language enthusiast, I can see huge potential for language learning. Imagine practicing conversations with an AI that can understand and respond to the nuances of your speech, helping you improve pronunciation and intonation.

Mental Health Support

While it’s not a replacement for professional help, the improved emotional recognition could make ChatGPT a valuable tool for initial mental health screening or as a supportive listener for those who need someone to talk to.

Enhanced Customer Service

Businesses could use this technology to create more empathetic and efficient customer service bots, capable of handling complex queries and emotional situations with greater finesse.

Limitations and Considerations

While I’m thoroughly impressed with the upgrade, it’s important to note some limitations:

- Privacy concerns: The enhanced voice recognition raises questions about data privacy and storage.

- Potential for misuse: As the technology becomes more human-like, there’s a risk of it being used for deception or impersonation.

- Emotional dependency: There’s a concern that some users might develop an unhealthy emotional attachment to the AI.

- Accuracy in diverse scenarios: While improved, the system may still struggle with very strong accents or noisy environments.

The Future of AI Voice Interaction

This upgrade is just the beginning. Based on the rapid progress we’ve seen, I predict we’ll soon see:

- Even more personalized voice options, possibly mimicking specific individuals (with consent, of course).

- Integration with other sensory inputs for a truly immersive AI interaction experience.

- Expansion into more languages and dialects, making the technology globally accessible.

Conclusion

ChatGPT’s voice upgrade is a significant leap forward in AI-human interaction. It’s not just about having a more natural-sounding assistant; it’s about creating a more intuitive, accessible, and empathetic digital world. As we continue to explore the possibilities of this technology, it’s crucial to balance innovation with ethical considerations.

Thank you for reading this deep dive into ChatGPT’s voice upgrade. If you’re interested in more AI developments, check out my posts on AI ethics in conversational agents and the future of multimodal AI.